Cache has become a pivotal technology, working quietly in the background of our digital devices and providing fast access to frequently used data. It operates like a discreet but tireless butler, serving up the information you need without making a fuss. Understanding how cache works can give you a deeper appreciation for the seamless, speedy performance of your devices.


In essence, a cache is a small, temporary, high-speed storage area that holds copies of the data you're most likely to need in the near future. That data is copied from a larger but slower level of storage. For a processor's cache, this is main memory (RAM). For disk, browser, or app caches, it is a hard drive, a solid-state drive, or a remote server across the network. By keeping frequently accessed data in cache, devices can dramatically reduce the time it takes to retrieve it.
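To make the idea concrete, here is a minimal Python sketch of a read-through cache sitting in front of a slow store. The class name `ReadThroughCache`, the dictionary standing in for slow storage, and the simulated delay are hypothetical illustrations, not the API of any real system. The sketch also has no size limit or eviction, both of which real caches need.

```python
import time


class ReadThroughCache:
    """Illustrative read-through cache in front of a slow backing store (hypothetical)."""

    def __init__(self, backing_store, delay_s=0.001):
        self.backing_store = backing_store  # dict standing in for slow storage (assumption)
        self.delay_s = delay_s              # simulated latency of the slow store
        self.cache = {}                     # fast copies of previously fetched items
        self.hits = 0
        self.misses = 0

    def get(self, key):
        if key in self.cache:               # hit: serve the fast copy
            self.hits += 1
            return self.cache[key]
        self.misses += 1                    # miss: go to the slow store
        time.sleep(self.delay_s)            # simulate the slow access
        value = self.backing_store[key]
        self.cache[key] = value             # keep a copy for the next request
        return value


store = {"a": 1, "b": 2}
c = ReadThroughCache(store, delay_s=0)
c.get("a")  # miss: fetched from the slow store
c.get("a")  # hit: served from cache
c.get("b")  # miss
print(c.hits, c.misses)  # 1 2
```

Only the first request for each key pays the cost of the slow store; repeated requests are served from the fast copy.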

Delving into the Cache Hierarchy:

The cache system comprises several levels, each trading speed against capacity. Closest to the processor sits the fastest and smallest Level 1 (L1) cache, followed by the Level 2 (L2) cache, and so on. Each successive level is larger but also slower (higher latency) than the one before it. This hierarchical arrangement keeps the most frequently used data in the faster levels, minimizing retrieval times.

Modern processors often incorporate multiple levels of cache, creating a complex hierarchy. For instance, a common configuration might consist of an L1 cache of 16KB to 64KB per core, an L2 cache of 512KB to 8MB, and an L3 cache of several megabytes or more. This multi-level design optimizes performance by keeping commonly accessed data in the smaller, faster caches.
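A common way to quantify the benefit of such a hierarchy is average memory access time (AMAT): AMAT = hit time + miss rate × miss penalty, applied level by level, where each level's miss penalty is the average cost of the levels beyond it. The Python sketch below uses made-up latencies and miss rates chosen only for illustration; they are not measurements of any real processor.

```python
def amat(levels, memory_latency):
    """Average memory access time for a cache hierarchy.

    levels: list of (hit_time, local_miss_rate) tuples ordered from L1 outward.
    memory_latency: cost of an access that misses every cache level.
    """
    penalty = memory_latency
    # Work from the outermost level inward: each level's miss penalty is
    # the average access time of everything beyond it.
    for hit_time, miss_rate in reversed(levels):
        penalty = hit_time + miss_rate * penalty
    return penalty


# Hypothetical numbers (in CPU cycles), for illustration only.
hierarchy = [(4, 0.10), (12, 0.40), (40, 0.50)]  # L1, L2, L3
print(round(amat(hierarchy, 200), 2))  # 10.8
print(amat([], 200))                   # 200 (no caches at all)
```

With these assumed numbers, an average access costs about 10.8 cycles instead of 200, because most requests are satisfied by the small, fast L1 and only a small fraction travel all the way to main memory.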

The Inner Workings of Cache:

Which data a cache keeps is governed by its replacement (eviction) policy, which decides what to discard when new data arrives and the cache is full. One of the most widely used policies is Least Recently Used (LRU), which evicts the data that has gone untouched for the longest time; many hardware caches implement cheaper approximations of it. By continually replacing the least recently used data with new data, the cache stays populated with the data most likely to be needed next.
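A minimal software sketch of LRU in Python, using `collections.OrderedDict` to remember access order. The `LRUCache` class is a hypothetical illustration; hardware caches implement replacement in circuitry, often with approximations of LRU, not like this. Returning `None` on a miss is a simplification that assumes `None` is never stored as a value.

```python
from collections import OrderedDict


class LRUCache:
    """Fixed-capacity cache that evicts the least recently used entry."""

    def __init__(self, capacity):
        if capacity <= 0:
            raise ValueError("capacity must be positive")
        self.capacity = capacity
        self.entries = OrderedDict()  # ordered from least to most recently used

    def get(self, key):
        if key not in self.entries:
            return None                    # miss
        self.entries.move_to_end(key)      # mark as most recently used
        return self.entries[key]

    def put(self, key, value):
        if key in self.entries:
            self.entries.move_to_end(key)  # updating also counts as a use
        self.entries[key] = value
        if len(self.entries) > self.capacity:
            self.entries.popitem(last=False)  # evict the least recently used entry


cache = LRUCache(2)
cache.put("a", 1)
cache.put("b", 2)
cache.get("a")      # "a" becomes the most recently used
cache.put("c", 3)   # cache is over capacity, so "b" (least recently used) is evicted
print(cache.get("b"), cache.get("a"), cache.get("c"))  # None 1 3
```

For memoizing function results in Python, the standard library already provides a ready-made decorator, `functools.lru_cache`.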

Caches also employ other techniques to improve efficiency. One is prefetching, which loads data into the cache before it is requested, typically by predicting upcoming accesses from past patterns such as sequential reads. When the prediction is correct, the data is already available when needed, hiding the latency of fetching it from slower memory.
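The toy Python simulation below illustrates a simple next-line prefetcher: whenever block n is accessed, block n + 1 is loaded as well. It assumes an unlimited cache and prefetches that arrive instantly, so it only counts demand misses; the function name and the model are hypothetical simplifications, not how any particular processor works.

```python
def count_misses(accesses, prefetch_next=False):
    """Count demand misses for a toy, unbounded block cache."""
    cached = set()
    misses = 0
    for block in accesses:
        if block not in cached:
            misses += 1            # demand miss: block was not already loaded
            cached.add(block)
        if prefetch_next:
            cached.add(block + 1)  # next-line prefetch: load the following block early
    return misses


sequential = range(100)        # blocks 0, 1, 2, ..., 99
strided = range(0, 200, 2)     # blocks 0, 2, 4, ..., 198

print(count_misses(sequential), count_misses(sequential, prefetch_next=True))  # 100 1
print(count_misses(strided), count_misses(strided, prefetch_next=True))        # 100 100
```

The sequential pattern drops from 100 misses to 1, while the stride-2 pattern gains nothing because the prefetched block is never the one requested next. This is why many real prefetchers try to detect the access pattern instead of always fetching the next block.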

Cache: The Unsung Hero of Computing:

Cache plays an indispensable role in the smooth operation of our devices. It invisibly accelerates everything from browsing the internet and launching applications to loading games and streaming videos. Without cache, these operations would be considerably slower, resulting in a sluggish and frustrating user experience.

Cache is particularly valuable for mobile devices, which have limited memory and processing power, because it helps them run smoothly despite those constraints. By keeping commonly used apps and data in cache, mobile devices reduce how often they must access slower permanent storage, which also saves energy and helps conserve battery life.

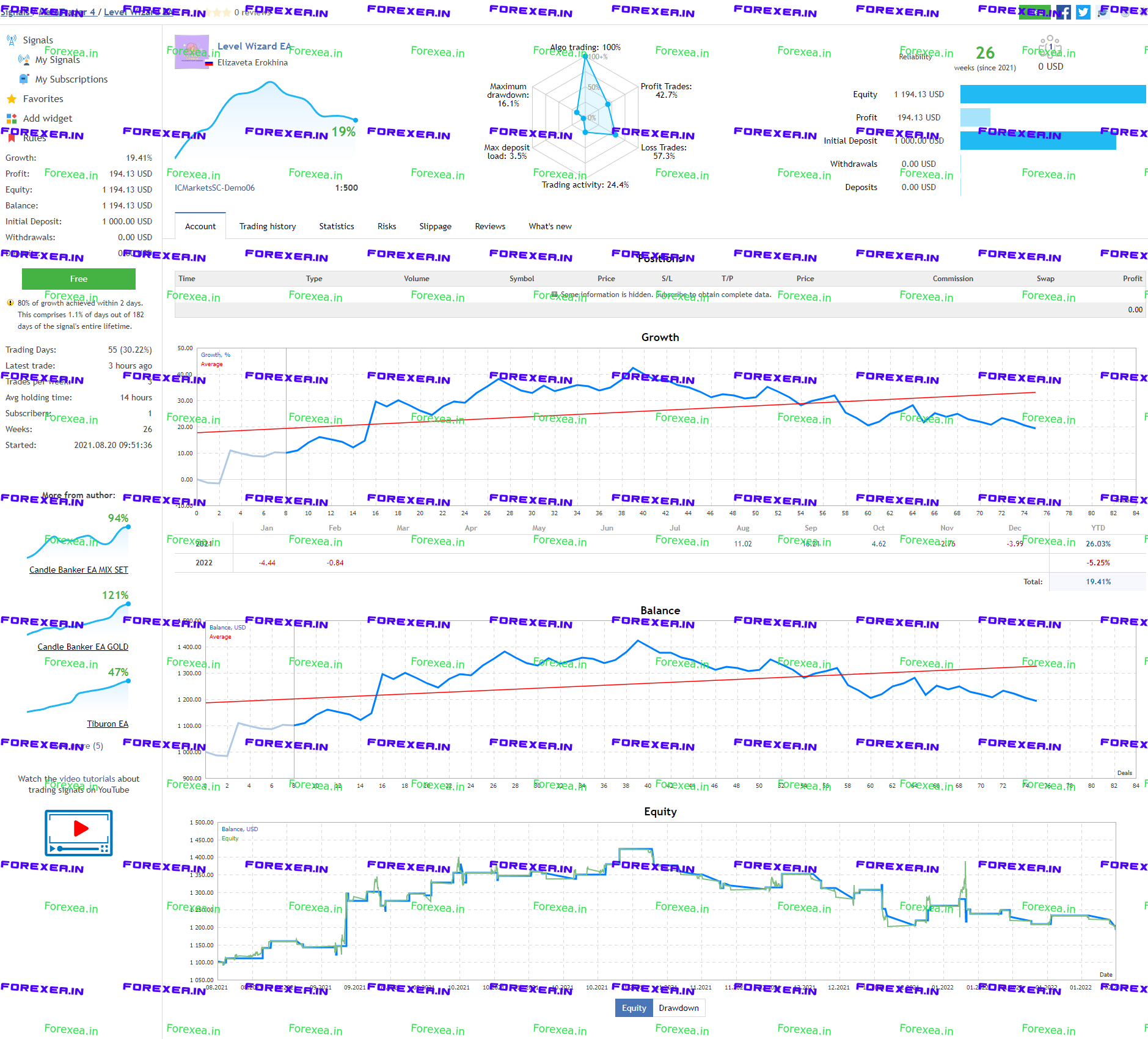

Image: www.wamc.org


Conclusion:

Cache has evolved into an indispensable technology, silently powering the fast and efficient performance of our digital devices. Its ability to store frequently accessed data close to the processor enables lightning-fast data retrieval, enhancing our user experience and ensuring seamless operation. Cache remains a crucial component of modern computing, playing a pivotal role in the smooth functioning of our devices and the efficiency of our digital interactions.